This blog post is the third in a series about how to get started building a data architecture in the cloud. The architecture is described in the first blog post. The series features two people, Dana Simpsons, the data scientist, and Frank Steve Davidson, the full stack developer, who are building their own SaaS company that will create games based on data science. In this blog post we describe how you can access blob storage from Ruby. In the next blog post of the series you will learn how to use R scripts in Azure Machine Learning Studio.

Position in the architecture

All programs need data at some point. That data can come either from files or from a database, and this is no different when your program lives in the cloud, where it may also need to access data that is stored in the cloud. In Azure, such files are stored in Blob Storage. For Dana and Frank's application, Frank needs to know how to access Blob Storage from Ruby. Because you might eventually want to store large amounts of information in the cloud, it is again important to understand the cost.

How to get the needed pieces from the Azure Portal

To access blob storage you need to know the account name, the access key and the container name. In the Azure portal, select Storage accounts to get an overview of your storage accounts. When you click on one of them, you will see a view similar to the one below, which will help you identify the pieces needed for your own example.
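Rather than hard-coding these three values in a script, one option is to keep them in environment variables. The sketch below assumes the hypothetical variable names AZURE_STORAGE_ACCOUNT, AZURE_STORAGE_ACCESS_KEY and AZURE_STORAGE_CONTAINER and a hypothetical helper called load_storage_settings; it only reads and validates the settings and does not contact Azure.

```ruby
# Hypothetical environment variable names; pick whatever fits your setup.
REQUIRED_SETTINGS = {
  account_name: "AZURE_STORAGE_ACCOUNT",
  access_key: "AZURE_STORAGE_ACCESS_KEY",
  container_name: "AZURE_STORAGE_CONTAINER"
}.freeze

# Reads the three values copied from the Azure portal and fails early
# with a clear message if any of them is missing or empty.
def load_storage_settings(env = ENV)
  missing = REQUIRED_SETTINGS.values.select { |var| env[var].to_s.strip.empty? }
  unless missing.empty?
    raise KeyError, "Missing storage settings: #{missing.join(', ')}"
  end

  REQUIRED_SETTINGS.transform_values { |var| env[var] }
end

# Usage (reads the real environment):
#   settings = load_storage_settings
#   settings[:container_name]
```

Failing early with the names of the missing variables makes a misconfigured VM much easier to diagnose than an authentication error returned later by Azure.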

Initializing blob storage object from Ruby

To access blob storage, make sure that the Azure gem is installed, and write require 'azure' at the beginning of your script. Once you have gathered the account name, access key and container name, you can initialize your blob storage object and define your connection string.
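The connection string combines the account name and access key in a fixed key=value format separated by semicolons. The helper names below (storage_connection_string, blob_endpoint) are hypothetical, and the default endpoint suffix assumes the public Azure cloud. The commented-out lines sketch how a client can be created with the newer azure-storage-blob gem; the older azure gem required in this post may expose a different entry point, so check the README of the gem you actually install.

```ruby
# Builds an Azure Storage connection string from the account name and key,
# in the same format the portal shows under "Access keys".
# The default endpoint suffix assumes the public Azure cloud.
def storage_connection_string(account_name, access_key, endpoint_suffix: "core.windows.net")
  [
    "DefaultEndpointsProtocol=https",
    "AccountName=#{account_name}",
    "AccountKey=#{access_key}",
    "EndpointSuffix=#{endpoint_suffix}"
  ].join(";")
end

# Blob endpoint URL for the account, e.g. https://myaccount.blob.core.windows.net
def blob_endpoint(account_name, endpoint_suffix: "core.windows.net")
  "https://#{account_name}.blob.#{endpoint_suffix}"
end

# With the azure-storage-blob gem installed, a client could then be created
# like this (not executed here, requires real credentials):
#   require "azure/storage/blob"
#   blob_service = Azure::Storage::Blob::BlobService.create(
#     storage_account_name: account_name,
#     storage_access_key: access_key
#   )
```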

List the content of your blob storage

The easiest way to list your content from a blob container is by using the Azure portal. You can find an example of this below.

Listing the contents of a container from Ruby, however, is also straightforward.

The first puts prints the blob objects themselves, and the second puts prints the file names, as you can see below.

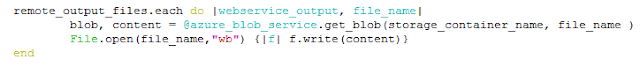
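The listing can be wrapped in a small helper that collects only the file names. This is a sketch that assumes a client behaving like the one from the azure-storage-blob gem, where list_blobs returns one page of blob objects that respond to name, together with a continuation_token when more pages remain; the helper name blob_names is hypothetical.

```ruby
# Hypothetical helper: returns the names of all blobs in a container.
# Assumes a client like Azure::Storage::Blob::BlobService, whose list_blobs
# returns one page of results plus a continuation_token for the next page.
def blob_names(blob_service, container_name)
  names = []
  marker = nil
  loop do
    page = blob_service.list_blobs(container_name, marker: marker)
    names.concat(page.map(&:name))
    marker = page.continuation_token
    # A missing or empty token means this was the last page.
    break if marker.nil? || marker.empty?
  end
  names
end
```

For example, blob_names(blob_service, "mycontainer").each { |name| puts name } prints the same kind of file list as the second puts, but keeps working when a container holds more blobs than fit in a single page of results.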

Downloading the files

Finally, you might want to download the files to a local drive or to another VM in the cloud. Below you can see the code for this.
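A download can follow the same pattern: fetch each blob's content and write it to a local file. The sketch assumes that get_blob(container, name) returns the blob properties and the content as a pair, as in the azure-storage-blob gem; download_blobs and its parameters are hypothetical names. Blob names containing "/" are treated as sub-folders, and names containing ".." are rejected so that a download cannot write outside the target directory.

```ruby
require "fileutils"

# Hypothetical helper: downloads the given blobs from a container into
# target_dir and returns the local file paths.
# Assumes blob_service.get_blob(container, name) returns [blob, content],
# as in the azure-storage-blob gem.
def download_blobs(blob_service, container_name, blob_names, target_dir)
  FileUtils.mkdir_p(target_dir)
  blob_names.map do |name|
    # Refuse names that would escape target_dir.
    raise ArgumentError, "unsafe blob name: #{name}" if name.split("/").include?("..")

    _blob, content = blob_service.get_blob(container_name, name)
    path = File.join(target_dir, name)
    # Names containing "/" act as virtual folders; recreate them locally.
    FileUtils.mkdir_p(File.dirname(path))
    File.binwrite(path, content)
    path
  end
end
```

Writing in binary mode keeps images, CSV files and other content byte-for-byte identical to what is stored in the container.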

Cost Analysis

Storage Cost

An overview of the blob storage prices can be found on the Azure Blob Storage pricing page. There is again a fixed cost and a variable cost for using blob storage: the first is for storing your data, and the second is for accessing it. The price for storing your data depends on the level of redundancy that you require and on the amount of data that you store in blob storage. The last aspect is whether you want fast (hot) or slower (cool) access to your data. Together, these combinations result in storage prices ranging from 0.01 USD to 0.046 USD per gigabyte per month.
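Because storage is billed per gigabyte per month, the monthly storage cost is simply the amount of data multiplied by the price for your chosen redundancy and access tier. The sketch below uses the two ends of the price range quoted above; prices change over time, so treat the constants as placeholders to check against the current pricing page. The helper name monthly_storage_cost is hypothetical.

```ruby
# Ends of the price range quoted in this post, in USD per GB per month.
# Placeholders: check the current Azure pricing page before relying on them.
CHEAPEST_USD_PER_GB_MONTH = 0.01
MOST_EXPENSIVE_USD_PER_GB_MONTH = 0.046

# Monthly storage cost for a given amount of data at a given price,
# rounded to cents.
def monthly_storage_cost(gigabytes, usd_per_gb_month)
  (gigabytes * usd_per_gb_month).round(2)
end

# Storing 500 GB therefore costs between 5.0 and 23.0 USD per month,
# depending on redundancy and access tier.
```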

Access Prices

For accessing your data, there are differences between blob/block operations, data retrieval and data writes. Blob/block operations are billed by the number of operations, except for deletes, which are free. Data retrieval is billed per gigabyte, but it is interesting to notice that data retrieval and data writes from hot storage are free. Finally, if you want to import or export large amounts of data, there are also options for shipping hard disk drives to and from Azure.
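The access rules above can be turned into a rough estimator. All rates are inputs rather than real prices; pricing operations per 10,000 is an assumption based on how Azure usually quotes operation prices, and the operation types (:write, :read, :delete) and the helper name monthly_access_cost are hypothetical. Deletes are skipped, and data retrieval and writes are only charged when the tier is not :hot.

```ruby
# Hypothetical estimator for monthly access costs, following the rules above.
# op_counts:       e.g. { write: 20_000, read: 50_000, delete: 1_000 }
# usd_per_10k_ops: price per 10,000 operations for each billable type
#                  (assumption: operations are priced per 10,000).
def monthly_access_cost(op_counts, usd_per_10k_ops, tier: :hot,
                        retrieved_gb: 0, usd_per_gb_retrieved: 0,
                        written_gb: 0, usd_per_gb_written: 0)
  ops_cost = op_counts.sum do |type, count|
    next 0 if type == :delete # delete operations are free
    count / 10_000.0 * usd_per_10k_ops.fetch(type)
  end
  # Data retrieval and data writes are free on hot storage.
  data_cost =
    if tier == :hot
      0
    else
      retrieved_gb * usd_per_gb_retrieved + written_gb * usd_per_gb_written
    end
  (ops_cost + data_cost).round(2)
end
```

Running the same workload through the estimator with tier: :hot and with a cooler tier is a quick way to see whether the lower storage price of cool storage outweighs its retrieval charges for your usage pattern.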

Conclusion

In this blog post we have learned more about using blob storage with Ruby and the costs involved. It is important to understand the usage pattern of your data to make the best decision about which type of blob storage to use. In one of the next blog posts we will show how blob storage can be used to call the web service that Dana generated from Azure Machine Learning Studio.
